“We stand on the cusp of a transformative era in artificial intelligence at Google,” declares CEO Sundar Pichai: “the dawn of the Gemini era.” Gemini, Google’s cutting-edge large language model, was first teased by Pichai at the I/O developer conference in May and has now been unveiled to the public. According to Pichai and Google DeepMind CEO Demis Hassabis, Gemini marks a substantial advance in AI models and is poised to affect virtually every one of Google’s products. Pichai stresses the significance of the moment: “One of the remarkable aspects is the ability to enhance a foundational technology and immediately witness its positive influence across all our products.”

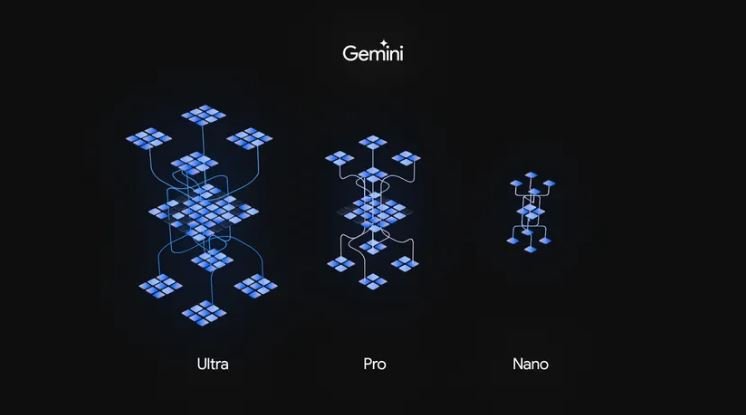

Gemini is not a single AI model but a family of models. The smallest, Gemini Nano, is designed to run natively and offline on Android devices. Gemini Pro, the mid-tier model, will power many of Google’s AI services and now forms the foundation of Bard. At the top sits Gemini Ultra, the most capable large language model (LLM) Google has built, aimed at data centres and enterprise applications.

Gemini is rolling out through several channels. Bard now runs on Gemini Pro, Pixel 8 Pro owners get new features powered by Gemini Nano, and Gemini Ultra is slated to arrive next year. Starting December 13th, developers and enterprise customers can access Gemini Pro through Google Generative AI Studio or Vertex AI in Google Cloud. For now Gemini works only in English, though Pichai says support for more languages is on the way. Google plans to go further still, integrating Gemini into its search engine, advertising products, the Chrome browser, and more. Indeed, the future of Google has arrived precisely when it is needed most.

A year and a week ago, OpenAI introduced ChatGPT and vaulted to the forefront of the AI landscape. Google, an AI pioneer that has called itself an “AI-first” organisation for nearly a decade, was caught off guard by ChatGPT’s rapid rise. Now Google is gearing up for a showdown, pitting its latest offering, Gemini, against OpenAI’s GPT-4.

The burning question is obvious: GPT-4 or Gemini? Google DeepMind CEO Demis Hassabis says the company ran an extensive comparison of the two systems across 32 established benchmarks, ranging from broad tests such as the Massive Multitask Language Understanding (MMLU) benchmark to narrower ones such as comparing the models’ ability to generate Python code. “I think we’re substantially ahead on 30 out of 32” of those benchmarks, Hassabis says with a confident smile. The benchmarks themselves vary widely in scope, from narrow to broad.

Google says Gemini outperforms GPT-4 on 30 of 32 benchmarks.

Across the benchmarks, where the margins are often narrow, Gemini’s standout strength is its ability to understand and interact with video and audio. That is no accident: multimodality was built into Gemini’s core design. Where OpenAI built separate models for images (DALL-E) and voice (Whisper), Google set out to build a single multisensory model from the outset. Hassabis says the team has always been interested in very general systems, which means blending many modes: gathering as much data as possible from many kinds of input and senses, and responding with just as much variety. The contest between GPT-4 and Gemini plays out not only on benchmarks but also in the strategic choices that will shape the future of AI.

For now, the most basic Gemini models take text in and produce text out. Higher up the range, more capable models such as Gemini Ultra can also work with images, video, and audio. Google DeepMind CEO Demis Hassabis expects the models to become more general still, taking in dimensions such as action and touch, which points toward robotics.

These models exhibit an enhanced understanding of the world around them.

In conversations with Pichai and Hassabis, it becomes clear that Gemini’s launch is both the start of a broader project and a significant leap forward in its own right. Gemini is the culmination of Google’s long-standing ambitions, perhaps the model the company had been working toward for years, and arguably one it should have shipped before OpenAI and ChatGPT came to dominate the AI landscape.

Google declared a “code red” after ChatGPT’s debut and has been seen as playing catch-up, yet it remains committed to its “bold and responsible” mantra. Both Hassabis and Pichai say they are reluctant to rush simply to keep pace, especially as the field edges closer to artificial general intelligence (AGI): an AI that surpasses human intelligence and can improve itself. AGI is the ultimate goal, and caution is paramount in approaching a technology this transformative. Hassabis stresses the need to approach AGI cautiously but optimistically.

Google says safety and responsibility were top priorities for Gemini, backed by rigorous internal and external testing and red-teaming. Pichai highlights data security and reliability, especially for enterprise products where generative AI plays a central role. Hassabis, however, acknowledges that launching a cutting-edge AI system carries inherent risks and that unforeseen issues and attack vectors may emerge. That, he says, is why it matters to release systems and learn from what happens; he describes the Ultra release as a carefully managed beta, a controlled space for experimenting with Google’s most capable model. In short, Google hopes to find problems before its users do.

For years, Pichai and other Google executives have touted AI’s transformative potential, with Pichai calling it more profound than fire or electricity. Gemini may not change the world on day one, but in the best case it puts Google in a much stronger position to compete with OpenAI in the race to build exceptional generative AI. There is a palpable sense of anticipation at Google, where Pichai, Hassabis, and others see Gemini as the beginning of something truly monumental. If the web made Google a tech giant, Gemini could prove even more consequential.